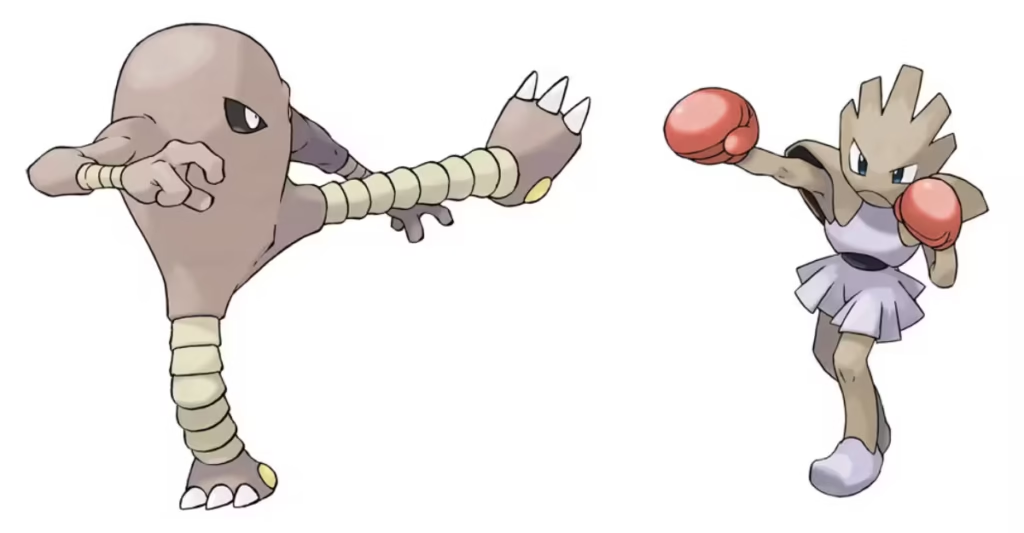

Hitmonlee vs Hitmonchan: Which Is Better in Pokémon FireRed & LeafGreen?

After you defeat the Fighting Dojo in Saffron City, the game rewards you with a choice: Hitmonlee or Hitmonchan. You can only pick one. That makes this one of the…